Posts Archive


Digital Cultural Heritage Link Roundup Autumn 2025

2025-11-15

Another collection of links on digital cultural heritage topics from the last few months, mainly for my own benefit but possibly of interest to others as well.

(I started on this in the spring but never finished writing it. Posting now so I can move on to a links catch-up post for Spring 2026 and maybe actually post it on time...)

AI and Humanities

Drimmer, S. (2025) ‘MACHINE YEARNING’, Artforum, 1 April. Available at: https://www.artforum.com/features/generative-ai-structure-of-feeling-1234728310/ (Accessed: 12 April 2025).

Burnett, D.G. (2025) ‘Will the Humanities Survive Artificial Intelligence?’, The New Yorker, 26 April. Available at: https://www.newyorker.com/culture/the-weekend-essay/will-the-humanities-survive-artificial-intelligence (Accessed: 28 April 2025).

The DeepSeek Series: A Technical Overview (no date) martinfowler.com. Available at: https://martinfowler.com/articles/deepseek-papers.html (Accessed: 30 April 2025).

Fittschen, E. et al. (2025) ‘Pretraining Language Models for Diachronic Linguistic Change Discovery’. arXiv. Available at: https://doi.org/10.48550/arXiv.2504.05523. [AKA - Text generation from historical time periods - https://huggingface.co/collections/Hplm/pretrained-historical-models-67fd13f6827793785525a095]

AI Models

Langlais, P.-C. et al. (2025) ‘Even Small Reasoners Should Quote Their Sources: Introducing the Pleias-RAG Model Family’. arXiv. Available at: https://doi.org/10.48550/arXiv.2504.18225.

Data Visualisation

Mei, X. et al. (2025) ‘ZuantuSet: A Collection of Historical Chinese Visualizations and Illustrations’, in Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, pp. 1–15. Available at: https://doi.org/10.1145/3706598.3713276.

Environment

Green DiSC: a Digital Sustainability Certification | Software Sustainability Institute (no date). Available at: https://www.software.ac.uk/GreenDiSC (Accessed: 30 April 2025).

Funding

‘On the NEH and Our Path Forward – Knowledge Commons’ (2025), 17 April. Available at: https://about.hcommons.org/2025/04/17/on-the-neh-and-our-path-forward/ (Accessed: 30 April 2025).

Rich Interlinked Text Specification (proposal)

2025-06-07

I think this is an issue unresolved in all cultural heritage collection websites (but please let me know if otherwise) and yet it's such a small basic web feature - the ability to link to other object pages in a collection from the text within an object page. For example in the V&A collection a record's description might say:

This design is closely related to those of contemporary orange-houses, with six bays in each range. It relates closely to Plan 2 (E.419-1951).

(an example V&A object)

But that reference (E.419-1951) to the other object in the V&A's collection is not helpfully linked through to the other object page, so the user would have to start a new search in the collection site to find it, which seems a poor experience. Of course there are usually other fields in the record where direct links between objects (or other relationships, such as to controlled vocabulary terms for faceted search) can be generated, so why not within the text?

Essentially it's a systems pipeline problem (I feel sure there is a much better word to describe this situation, please let me know!). The collection management system where curators and cataloguers write the object information is often not the same system that makes the records available as web pages; instead, the data from one system is passed on and transformed into HTML by a second system. This means the different fields in the object record need to be transformed into HTML, and how this is handled depends on the type of field: mainly free-text fields or controlled vocabulary fields (with other minor variants I'm ignoring for this post). If it's a controlled vocabulary field, based on knowing:

- the field type (that it is a controlled vocabulary managed field)

- the field meaning (e.g. is it a controlled vocabulary for materials, or places, or artist/makers, etc)

- the field value (as the controlled vocabulary identifier, e.g. AAT300045514)

- the field value (as the controlled vocabulary displayable name, e.g. Jet)

when generating the HTML for this field, we can show the displayable name ("Jet") in the page of course, but we can also generate the URL to link to a faceted search for that material (or other controlled vocabulary term). The URL will vary depending on the website's structure and architecture but it would be something along the lines of:

/search?[controlled vocabulary]=[controlled vocabulary term identifier]

or less abstractly, for the V&A Collection site:

/search?id_material=AAT300045514

So then the user doesn't have to initiate the search themselves (and avoids doing a general text search for 'Jet', which would return objects using the word in multiple meanings: Jet engine, Jet set, etc.).
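As a rough sketch of that link generation in Python: the function and the mapping are hypothetical, and only the id_material parameter comes from the V&A example above (the other parameter names are invented for illustration).

```python
from html import escape
from urllib.parse import urlencode

# Hypothetical mapping from field meaning to search parameter name.
# Only "id_material" appears in the post; the others are invented.
FACET_PARAMS = {
    "material": "id_material",
    "place": "id_place",
    "maker": "id_person",
}


def facet_link(field_meaning: str, term_id: str, display_name: str) -> str:
    """Return an HTML link to a faceted search for one controlled term."""
    query = urlencode({FACET_PARAMS[field_meaning]: term_id})
    return f'<a href="/search?{query}">{escape(display_name)}</a>'


print(facet_link("material", "AAT300045514", "Jet"))
# <a href="/search?id_material=AAT300045514">Jet</a>
```

The point is that everything needed to build the link (field meaning, identifier, display name) is already in the record, so no guessing is involved.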

But for free text fields the situation is different, going back to the first example:

This design is closely related to those of contemporary orange-houses, with six bays in each range. It relates closely to Plan 2 (E.419-1951).

There is no information that can be used to generate a URL automatically from the quoted museum accession number appearing within the text, primarily because we don't even know it is a museum accession number. We could write some regular expression rules to try to identify this, but (certainly for the V&A) there are many different ways these numbers can be written, sometimes just appearing as a single number, so we would likely create many erroneous links if we turned every instance of a number in a free-text field into a link to an object page.
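To illustrate the false-positive problem, here is a small Python sketch with a deliberately naive pattern. The pattern is my own assumption for the example, not a real rule for V&A accession numbers, which come in many more shapes.

```python
import re

# Naive, hypothetical pattern: optional letter prefix with a dot,
# some digits, and an optional "-year" suffix.
ACCESSION_RE = re.compile(r"\b(?:[A-Z]{1,4}\.)?\d+(?:-\d{4})?\b")

text = (
    "This design is closely related to those of contemporary orange-houses, "
    "with six bays in each range. It relates closely to Plan 2 (E.419-1951)."
)

matches = ACCESSION_RE.findall(text)
print(matches)
# ['2', 'E.419-1951']
```

The pattern does find E.419-1951, but it also picks up the "2" from "Plan 2", which would become an erroneous link. Tightening the pattern to require a prefix would avoid that, at the cost of missing numbers written as a single number.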

Also, somewhat unpleasingly to a tidy mind, even if we did turn the museum accession number into a link, we can't actually link directly to the object page; we would just have to link to a text search for it, as our URLs use the collection management system identifier for object records, whereas the text quotes the museum accession number for the physical object (for reasons too long to get into in this already too long post). So the link would just be:

/search?q=E.419-1951

rather than:

/item/O195744

which again would seem less than ideal (although admittedly not too terrible a crime, as presumably the search would return the object as well, but with one extra click for the user each time to reach it).

Bad proposal

A simple solution would be for curators and cataloguers to enter the URL for the related object into the object record directly like so:

<a href="http://example.org/object/O1234">

While this works, it hardcodes into the object record information about a different system (the website) which may change over time, which might cause the URLs to break (obviously this should not happen, and redirects should be put in place to handle this - but still, it seems bad to hardcode architectural assumptions about one system into another system). It also requires the user to write some more HTML tags correctly by hand (unless a Collection Management System vendor implements it in their text editor to handle this), which creates the risk of tags not being closed, breaking the object page.

Better(?) Proposal

Fundamentally the issue then is that some (system architecture?) knowledge is not passed on between the two systems in the pipeline, knowledge which would tell the object page generator system how it could handle museum accession numbers within free text in some better way. To try to avoid creating some complex new standard for resolving this, and because HTML typographic tags are often used to pass on instructions around how text should be shown (e.g. for italics or bold), a proposal would be the use of the <data> HTML tag:

"The <data> HTML element links a given piece of content with a machine-readable translation."

(to quote from MDN - https://developer.mozilla.org/en-US/docs/Web/HTML/Reference/Elements/data)

so that feels like a reasonable usage: we could include the knowledge of the object record identifier (for generating the URL) alongside the museum accession number (for humans to read). The free-text field would then be written as:

<data value="O1234">A.123</data>

Which would have no impact on a page generation system that doesn't handle it (as the data element doesn't alter the text's presentation - although potentially the whole HTML could appear in raw form as above if HTML tags are not parsed/removed). But for an object page generation system that did know how to handle this, it could turn this into a direct link into the associated object record.

Admittedly, this has the same issues as writing in the HTML link directly, that is, it requires the curator/cataloguer to type in an HTML tag correctly (unless the collection management system vendor implements something in the text editor to insert them for the user), but this time they are not hardcoding in a URL for another system, instead they are just putting in the identifier for the corresponding object record in the same system they are writing the current object record.
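A minimal sketch of what the page generation side might do with this, in Python. The function name is hypothetical, the /item/ path is taken from the V&A URL shown earlier, and a regular expression is used only to keep the example short (a real implementation would more likely use an HTML parser).

```python
import re
from html import escape

# Matches <data value="...">label</data> where the label has no nested tags.
DATA_RE = re.compile(r'<data\s+value="([^"]+)">([^<]*)</data>')


def link_data_elements(html_text: str, base_path: str = "/item/") -> str:
    """Rewrite <data value="ID">label</data> as a link to the object page."""

    def to_link(match: re.Match) -> str:
        record_id, label = match.group(1), match.group(2)
        # The label is already HTML text from the record, so it is kept as-is.
        return f'<a href="{base_path}{escape(record_id, quote=True)}">{label}</a>'

    return DATA_RE.sub(to_link, html_text)


print(link_data_elements('It relates closely to Plan 2 (<data value="O1234">A.123</data>).'))
# It relates closely to Plan 2 (<a href="/item/O1234">A.123</a>).
```

The URL structure lives only in the page generator (the base_path argument here), so if the website changes, the object records don't need to be touched.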

Extending further

Taking this further, the data element could also have some custom attributes (using data-) which allows for a variety of different links to be generated in the page building system, for example:

- links to a controlled vocabulary faceted search (perhaps data-controlled-field="materials" to indicate a link to the materials facet)

- links to other cataloguing systems such as the library or archive catalogue

- links to map co-ordinates

- links to other institutions' systems (but then how do we know how to build the right URL for the other institution?)

But perhaps that is something that needs more standardisation, to avoid a mess of different custom attributes across systems. Possibly an area for an organisation like Collections Trust to take up? (because there are no other problems around of course!)
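To make the custom attribute idea a bit more concrete, here is a hedged Python sketch that reads the attributes with the standard library's HTMLParser and picks a link target for each <data> element. The class name and the mapping from "materials" to id_material are assumptions for illustration; only the first list item above is covered.

```python
from html.parser import HTMLParser
from urllib.parse import urlencode

# Hypothetical mapping from data-controlled-field values to search parameters.
FACET_PARAMS = {"materials": "id_material"}


class DataElementCollector(HTMLParser):
    """Collect the URL each <data> element in a text field would link to."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "data":
            return
        attributes = dict(attrs)
        value = attributes.get("value")
        if value is None:
            return  # nothing to link to
        field = attributes.get("data-controlled-field")
        if field in FACET_PARAMS:
            # Controlled vocabulary term: link to the faceted search
            self.hrefs.append("/search?" + urlencode({FACET_PARAMS[field]: value}))
        else:
            # Default: treat the value as an object record identifier
            self.hrefs.append("/item/" + value)


collector = DataElementCollector()
collector.feed(
    'Carved <data value="AAT300045514" data-controlled-field="materials">jet</data>, '
    'see also <data value="O1234">A.123</data>.'
)
print(collector.hrefs)
# ['/search?id_material=AAT300045514', '/item/O1234']
```

Each new link type needs another entry or branch in the page generator, which is exactly where the lack of agreed attribute names across systems would start to hurt.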

Mistral AI Illustrations Detection Tests

2025-03-18

Mistral OCR test on National Art Library collections from C11-20th

Following on from the announcement about Mistral's OCR model, I thought I would (unfairly!) test it against some examples of manuscripts and books from each century from the 11th to the 20th, to see how well it can recognise illustrations amongst text (or vice-versa). All examples taken from the National Art Library at the V&A.

First some setup (all taken from Mistral's examples - https://docs.mistral.ai/capabilities/document/)

    import base64
    import os

    import requests
    from dotenv import load_dotenv
    from IPython.display import Markdown, display
    from mistralai import Mistral
    from mistralai.models import OCRResponse

    load_dotenv()  # take environment variables/config for API key
    api_key = os.environ["MISTRAL_API_KEY"]
    client = Mistral(api_key=api_key)


    def replace_images_in_markdown(markdown_str: str, images_dict: dict) -> str:
        # Swap each image placeholder for its base64 data so it renders inline
        for img_name, base64_str in images_dict.items():
            markdown_str = markdown_str.replace(
                f"![{img_name}]({img_name})", f"![{img_name}]({base64_str})"
            )
        return markdown_str


    def get_combined_markdown(ocr_response: OCRResponse) -> str:
        # Join the markdown of every page, with its images inlined
        markdowns: list[str] = []
        for page in ocr_response.pages:
            image_data = {}
            for img in page.images:
                image_data[img.id] = img.image_base64
            markdowns.append(replace_images_in_markdown(page.markdown, image_data))
        return "\n\n".join(markdowns)
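The evaluations that follow were all done by eye, but a rough tally of what came back could help when comparing centuries. This is a hypothetical helper (not part of Mistral's examples) that counts markdown image references and the remaining words in a returned markdown string.

```python
import re

# Markdown image reference: ![alt](target)
IMAGE_REF_RE = re.compile(r"!\[[^\]]*\]\([^)]*\)")


def summarise_markdown(markdown: str) -> dict:
    """Count image references and remaining words in OCR markdown output."""
    images = IMAGE_REF_RE.findall(markdown)
    text_only = IMAGE_REF_RE.sub("", markdown)
    return {"images": len(images), "words": len(text_only.split())}


print(summarise_markdown("![img-0.jpeg](img-0.jpeg)\n\nLES PLAISIRS"))
# {'images': 1, 'words': 2}
```

A result with one image and no words would correspond to the "whole page recognised as an illustration" cases, although it says nothing about whether the recognised text is correct.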

11th Century

Part of a leaf from a Lectionary

ca.1075-1100

https://collections.vam.ac.uk/item/O1754458/part-of-a-leaf-from-manuscript/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2010EB1329/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

miguem mit ratur. quax do quifgrp

dif poft mortem gebenne. concr-mata

o muentr. quidum u uerer. fructum

operaf facere recufunt. Ingemmat

dif dnf bonarum malarumqo arborum

monem cum adbuc fubtungtr dicenf.

r. ex fructbuf coy cognof ceafcof. (a) u

enum fructu{ inquibamide arboref abonuf

trnurtur. pauluf apti oftendtr dicenf.

seta aut funt operacarnuf. que funt. for

nonef. unmundrace. inpudicrue. luxu

idolorum feruruf. Yueneficue. inmuet

contertionef. enultrionef. ux. tixe.

onfionef. fecte. muidue. homicidur.

retatef. commef fationef. er fuf fimilu

dia quaguate regnum di nonconfequent.

ulubuf per bucremum ppfium dicetur.

dicauf bomo quconfidur in bomune. erpo

carnem brachum fuum. eradrio recedtr

cuuf. Frit enim quafi murice indeferto.

on uadebir cum uenertr boni. fedbabi

tr inflectare infiltudine intera fal.

quuf erint. bals. Xruen boni arbor

dacf. uctuf pfece. ad-

quamfidur indrio

qun unufelt df. beneficuf. er demonef tre-

munt. ercontremefcunt. (a) uof dnif per

ppfium rephut dicenf. Populuf bielabuf

mehonort corautè coy longe ême. Talef

tempore uudictr. quia fine fructu boni

operif clamabunt dirc.dric apernobuf.

uidure mereburtur. amendico uobif nefio

uof. (a) uergo uulintrare unreguit ceton.

nonfolum fare defiderer quid ucltr df. (a) ue

fed eram unplere. quod uber di. (a) ue

ficut dnif ut inguglio. Betuqui audtunt

uerbum di er cuftodunt illud. Et tim di

fepuluf. Beztertaf fifecertafca.

1552

DON. X. PIPENTEOSIEN.

ARABOLF SAEo monuf filu daud regifift. Adfaenda fapicrtam erdafea plinam. adtratlegrenda uerba prudertue erfu fapicendum eruditionem doctrine. ufftrum. eruducui. eracq crtem ut decur piruuluf aftetarido lefecmabuf unellectuf. Audienf fars

Evaluation

The handwriting recognition is plausible-looking but wrong; it does, impressively, partially detect the initial within the text (compare with later illuminated manuscript tests where this separation doesn't happen - not sure why the model picks up on this in this work and not the others).

12th Century

Initial from Gratian's Decretum

1160-1165

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2009CP6384/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

The whole page/cutting is recognised as an illustration, but the text is not recognised (perhaps Mistral doesn't recognise Latin?) and the initial is not recognised as a separate illustration.

13th Century

Glazier-Rylands Bible Manuscript Cutting

ca.1260-1270

https://collections.vam.ac.uk/item/O130464/glazier-rylands-bible-manuscript-cutting-unknown/

Two columns of text with initial and marginal bar

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2006BC3631/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

The page is recognised as a single illustration, but no text is recognised and the initial (and marginal bar) are not recognised as separate illustrations.

14th Century

Manuscript Cutting

https://collections.vam.ac.uk/item/O1262522/manuscript-cutting/

A page from an illuminated manuscript with five initials, text and a decorated column

2013GJ1058

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2013GJ1058/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

The whole page is recognised as an image but otherwise no text is recognised and the individual images of the initials and the decorative column are not split out.

15th Century

Melusina (1481)

A woodcut with an illustration at the top and text underneath with an initial.

https://collections.vam.ac.uk/item/O115385/melusina-woodcut-pr%C3%BCss-johann/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2006AA4119/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

The whole page is recognised as an image, with none of the text at the bottom recognised, possibly due to the font? The initial is not recognised as an illustration of its own.

16th Century

Book

1554

An illustration with text underneath

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2011FD8917/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

que de crinin alla Aubin Pumme, e delle, su uide, pili si poi armino siluide, uide

gambistimo yiddice. su, in pio, yomo siluodile, uo si pumino di petto sfumino, olle

gelo, e fellissimo, nicbo, anche lui, si poi fuo il rumio dapplèo o si miltese d'oro.

Evaluation

The text is rather off, but the illustration is well separated out. But given that it is rather easier to split the text and the illustration on this page, let's try a much harder page from the same book...

Book

https://collections.vam.ac.uk/item/O1025805/book-filippo-orso/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2011FD8898/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

This time the whole page is taken as an illustration, which is reasonable enough, but oddly none of the surrounding text (even the caption at the bottom of the page) is recognised as text.

17th Century

1673-74 - Les plaisirs de l'Isle enchantée

Illustration at the top of the page and within the printed text at the bottom of the page

https://collections.vam.ac.uk/item/O1637296/les-plaisirs-de-lisle-enchantee-book/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2021NA1039/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

LES PLAISIRS

D E L'ISLE

ENCHANTÉE.

COURSE DE BAGUE:

COLLATION ORNÉE DE MACHINES; COMEDIE, MESLÉE DE DANSE

ET DEMUSIQUE;

BALLET DU PALAIS DALCINE;

FEU D'ARTIFICE:

ET AUTRES FESTES GALANTES

ET MAGNIFIQUES,

FAITES PAR LE ROY A VERSAILLES,

LE VIL MAY M. DC. LXIV.

ET CONTINUÉES PLUSIEURS AUTRES JOURS.

E ROY, voulant donner aux Reines \& à toute fa Cour le plaifir de quelques Fettes peu communes, dans un lieu orné de tous les agrémens qui peuvent faire admirer une Maifon de Campagne, choifit Verfailles, à quatre lieuës de Paris. C'eft un Chafteau qu'on peut nommer un Palais enchanté, tant les ajuttemens de A ij

Evaluation

A very successful result with perfect separation of text and illustrations; the text all seems correct.

18th Century

1708-1710 - Aesop's Fables

A printed copy of Aesop's Fables with hand-drawn illustrations by the owner, similar to illuminated manuscripts

https://collections.vam.ac.uk/item/O1719295/the-tenterden-aesop-book-lestrange-roger-sir/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2015HJ8363/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

80 Esop's Fables.

And this Caution holds good in all the Bufinefs of a Sober Man's Life ; as Marriage, Studies, Pleafures, Society, Commerce, and'the like ; Tis in fome fort, with Friends (Pardon the Coarfenefs of the Illuftration) as it is with Dogs in Comples. They fhould be of the Same Size, and Humour, and that which pleafes the One fhould Pleafe the Other; But if they Draw Several Ways, and if One be too Strong for T other, they'l be really to Hang themfelves upon every Gate or Stile they come at. This is the Moral of the Friendthip betwixt a Thrufo and a Sinalow, that can never Live together.

FAB. 66. A fouler and a 19 igron.

S a Country Fellow was making a Shoot at a Pigeon, he trod upon a Snake that bit him by the Leg. The Surprize startled him, and away flew the Bird.

The Morat.

We are to Diftinguilh betwixt the Benefes of Good Will, and thefe of Providence: For the Latter are immediately from Heaven, where no Human Intention Intervenes.

REFLEXION.

THE Mifchief that we Meditate to Others, falls commonly upon our Own Heads, and Ends in a Judgment, as well as a Difappointment. Take it Another Way, and it may ferve to Mind us how Happily People are Diverted many Times from the Execution of a Malicious Defigu, by the Grace and Goodnefs of a Preventing Providence. A Piftol's not taking Fire may fave the Life of a Good Man; and the Innocent Pigeon had Dy'd, if the Spiteful Snake had not broken the Fowler's Aim: That is to fay ; Good may be drawn out of Evil, and a Body's Life may be Sav'd without having any Obligation to his Preferver.

FA B. 67. A Crumpeter Taken Prifoner.

Evaluation

The text is almost perfectly recognised (Crumpeter rather than Trumpeter is an amusing error though). The illustration at the bottom of the page is well recognised, although the smaller illustrations in the margin have been ignored.

Nouvelles Cartes de la République Française

1793

French text and card designs in a grid with captions (below and on the side) with some text as well within each card design.

https://collections.vam.ac.uk/item/O126731/nouvelles-cartes-de-la-r%C3%A9publique-print-jean-d%C3%A9mosth%C3%A8ne-dugourc/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2013GU7329/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

NOUVELLES CARTES DE LA RÉPUBLIQUE FRANÇAISE. PLUS DE ROIS, DE DAMES, DE VALETS; LE GÉNIE, LA LIBERTÉ, L'ÉGALITÉ LES REMPIACENT, LA LOI $A B U B B S T A V-D E S S U S D^{\prime} B U X$.

Si les vrais seuls de la philosophie et de l'humanité ont concrqués avec plaisir, parmi les types de l'Égalité, le Etern-Calore et le Migne; ils alimentent non-motif le voir LA LOI, SEULE SOTTENAIRE N'UN FRUfLE STERE, coribement L'Âs de sa suprême puissance; dont les faissances sont l'Europe, et lui donnent son acris. On doit donc dire; Quatorze ou Lue, les Grets, les Libérés ou l'Égalite; en lieu de Quatorze d'Âs, de Bois, de Dames ou de Valois; et Diexayildes, Seialdnes, Quinte, Quartieme ou Tierce ou GèPre, à la Libérée ou à l'Égalite; en lieu de les nommer ou Roi, à la Deme ou en Voir : LA LOI donne seule la désomination de Mahone.

Ann Jean où les Voiets de Truffle ou de Coup ont une valeur particulières, comme un Hecency ou à la Miracle, il faut substituer L'ÉGALITE DE DEVORA ou celle de DANTE.

Evaluation

The French text at the top has been perfectly recognised; the illustration is recognised as one whole image, which is understandable, although it would be even better if there was some way to instruct it to break the image up into the component squares of the grid. The caption text in the illustration is ignored.

19th Century

1883 - Histoire des quatre fils Aymon : très nobles et très vaillans chevaliers

A double-page spread with text in between and on top of illustrations and patterns

https://collections.vam.ac.uk/item/O1744376/histoire-des-quatre-fils-aymon-book-gillot-charles/

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2006AM9812/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Evaluation

A little unfair to give it a double-page spread perhaps; it does at least recognise the two different pages, but apart from that no text is recognised.

20th Century

Cautionary Tales for Children by Hilaire Belloc

https://collections.vam.ac.uk/item/O1370845/cautionary-tales-for-children--book-belloc-hilaire/

Illustrations in-between printed text

    img_response = client.ocr.process(
        model="mistral-ocr-latest",
        include_image_base64=True,
        document={
            "type": "image_url",
            "image_url": "https://framemark.vam.ac.uk/collections/2016JL3856/full/full/0/default.jpg",
        },
    )
    display(Markdown(get_combined_markdown(img_response)))

Jim, Who ran away from his Nurse, and was eaten by a Lion.

There was a Boy whose name was Jim; His Friends were very good to him. They gave him Tea, and Cakes, and Jam, And slices of delicious Ham, And Chocolate with pink inside, And little Tricycles to ride, And

Evaluation

The text is perfectly recognised, but the illustration of the lion has been ignored for some reason.

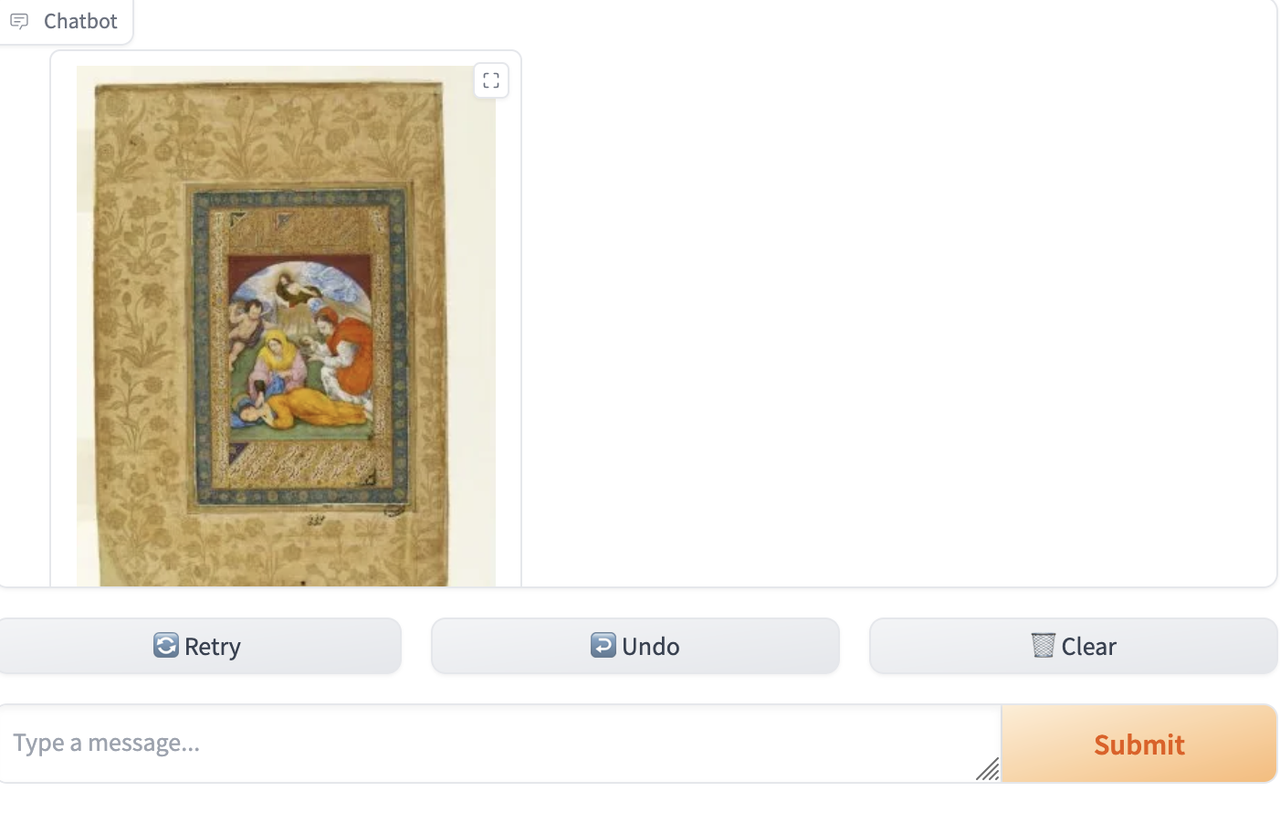

Conversational Search for Cultural Heritage part 2

2025-02-09

I'd called the previous blog post 'Conversational Search for Cultural Heritage' but the implementation in it was not really much of a conversation, as I was just sending individual queries/prompts one at a time to the LLM with RAG, with no real history between the queries. So I wanted to make good on my claim for "conversational search" by developing this more.

I've had to combine a few more tutorials for this and also am using the Gradio interface tools:

Gradio - https://www.gradio.app/guides/creating-a-chatbot-fast

https://python.langchain.com/docs/tutorials/qa_chat_history/ - Adding in chat history

https://python.langchain.com/docs/how_to/qa_sources/#conversational-rag - maintain RAG response between queries

(the latter took a long time to work out, and I'm still not sure if it's working correctly or if it's sending a query to the vector search every time, which is slowing down every response).

I also wanted to make use of the DeepSeek model, given it's the model of the moment (and it would let me move the LLM querying to a local model instead of being API rate limited, as I was with the Mistral one I was using before). But at the moment via Ollama it doesn't support tooling (hopefully this will be resolved soon), so I switched instead to using Qwen2.5 (7B) via Ollama. This stopped me hitting rate limits, but I don't have a fast computer/GPU so it's slow to get any response (hence the recording of the chat is not real-time, as it took about 2 mins to ask/answer 3 questions!).

I added into the LLM prompt some special instructions for handling requests for images, telling it to return the image asset ID only, which I can then check for (as it's a fixed format) and instead of returning text, switch to returning the image instead (using the V&A IIIF image API).
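A minimal sketch of that check in Python. The ID pattern is an assumption inferred from asset IDs such as 2010EB1329 in V&A image URLs, and render_reply is a hypothetical name, not the function used in the notebook.

```python
import re

# Assumed asset ID shape (e.g. 2010EB1329): 4 digits, 2 capitals, 4 digits.
ASSET_ID_RE = re.compile(r"\d{4}[A-Z]{2}\d{4}")

# IIIF Image API URL in the form used by the V&A image service.
IIIF_TEMPLATE = "https://framemark.vam.ac.uk/collections/{asset_id}/full/full/0/default.jpg"


def render_reply(llm_text: str) -> dict:
    """Return an image reply if the LLM answered with a bare asset ID, else text."""
    candidate = llm_text.strip()
    if ASSET_ID_RE.fullmatch(candidate):
        return {"type": "image", "url": IIIF_TEMPLATE.format(asset_id=candidate)}
    return {"type": "text", "text": llm_text}


print(render_reply(" 2010EB1329\n"))
print(render_reply("Saint Cecilia is shown playing an organ."))
```

Using fullmatch means a reply that merely mentions an ID inside a sentence is still treated as text, which relies on the LLM following the instruction to return the ID only.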

I also switched the dataset I'm using from 100 dog related objects to 100 objects depicting saints, hence the query below about Saint Cecilia.

With all that done, this allowed me to get conversations like this:

(note - there are actually two objects depicting Saint Cecilia in the 100 objects dataset, but the vector search only returns one for some reason, so the LLM is correct given the data it receives)

I think this is getting towards a slightly more conversational search that might be of some interest to some users wanting to explore a collection. But the restrictions I noted in the first blog post remain: the RAG approach limits the amount of knowledge that can be sent to the LLM so this (for the moment) would suit subsets of the collection rather than the whole. I'm going to think about what different types of collection search/discovery and AI techniques might be useful in the next post.

Notebook (tidied up a lot): Conversation RAG Search 02

Conversational Search for Cultural Heritage

2025-01-04

Summary: LLM/RAG chatbots seem to be a good new way to aid in some types of cultural heritage search. But much more experimentation needed. Also see ethics/environmental/economic costs.

    response = graph.invoke({"question": "What was the name of Hogarth's dog?"})
    print(response["answer"])

The name of Hogarth's dog was Trump (SystemNumber: O97313). This pug was prominently featured in Hogarth's self-portrait of 1745. Hogarth's combative personality led him to be depicted as Trump in a 1753 caricature by Paul Sandby.

    response = graph.invoke({"question": "What artworks are associated with greyhounds"})
    print(response["answer"])

Two artworks are associated with greyhounds. The first is a painting by Louis Godefroy Jadin (no system number provided), which features greyhounds in a hunting scene. The second is a terracotta statuette of a hound (inv. no. A.10-1954), although it's not clear if it's specifically a greyhound.

    response = graph.invoke({"question": "Which artworks depict St Dominic with a dog?"})
    print(response["answer"])

The artwork that depicts St. Dominic with a dog is a stone and walnut ivory relief made by Diego Reinoso in 1669. The dog, which resembles a Chinese Foo dog, is sitting at the feet of St. Dominic and is one of his main symbols.

(NB the object system number in the first response is the wrong object! - https://collections.vam.ac.uk/item/O97313/figure-unknown/ , it should be https://collections.vam.ac.uk/item/O77828/hogarths-dog-trump-figure-roubiliac-louis-fran%C3%A7ois/)

The desire to find some alternative option for discovery in cultural heritage collection sites beyond the search box seems to have been around since, well, they started. But beyond the addition of faceted search, there haven't really been any other huge changes to aid discovery (of course there have been many great one-off projects exploring new options such as adding data visualisation/generous interfaces, but nothing that has become a standard discovery mode used for most collection sites).

So obviously, given this is a blog post in 2025, the answer must be AI? Well maybe. It seemed worth exploring some options, starting with a conversational AI interface given that chatting with a large language model (LLM) is very fashionable.

To do this requires getting the information we hold in our collection systems into an LLM, so it can respond with the relevant information from our collections and, ideally, not with any information from other sources, and definitely not with AI hallucinations. There seem to be two main approaches for doing this:

Finetuning - Adding new documents onto a previously trained LLM

Retrieval Augmented Generation (RAG) - Retrieve selected relevant documents in response to a query and return the result to the user from an LLM applied to those documents and the query.

RAG seems to be the simpler option so I've gone for that for this quick experiment, but finetuning is something that is also worth looking into, although I don't know if the quantity of the data would be enough to make it useful.
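To show the shape of the RAG approach, here is a toy Python sketch using only the standard library. Word-count cosine similarity stands in for a real embedding model and vector store, the LLM call itself is left out, and the records and their IDs are invented for illustration.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lower-cased word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, records: list[dict], k: int = 2) -> list[dict]:
    """Retrieval step: return the k records most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(records, key=lambda r: cosine(q, bag_of_words(r["text"])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, records: list[dict], k: int = 2) -> str:
    """Augmentation step: only the retrieved records are shown to the LLM."""
    context = "\n".join(f'[{r["id"]}] {r["text"]}' for r in retrieve(question, records, k))
    return f"Answer using only these records:\n{context}\n\nQuestion: {question}"


# Invented toy records, not real collection data
records = [
    {"id": "X1", "text": "Terracotta statuette of a hound"},
    {"id": "X2", "text": "Painting of a pug dog"},
    {"id": "X3", "text": "Silver teapot with floral decoration"},
]

print(build_prompt("Which objects show a pug?", records))
```

The generation step would then send this prompt to the LLM. Note that the teapot record never reaches the LLM at all, which is the same restriction that limits summary-style questions to whatever the retrieval step returns.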

There are a lot of tutorials out there on building RAG based chatbots. I've followed one from LangChain for the most part (https://python.langchain.com/docs/tutorials/rag/), but with some modifications as I went through it. The finished Jupyter notebook is here [to be added once I've tidied it up!]. In summary I had to:

Create a CSV file with descriptions of a set of objects. I used a set of V&A museum collection objects that all depict dogs (for, reasons) and took the title and summary description field as well as the museum database system number to identify them.

Create a new prompt (vam/chat-glam - available here for EU usage - https://eu.smith.langchain.com/hub/vam/chat-glam, might need to add it separately to the USA LangChain Hub, presumably for legal reasons). All the prompt does is try to make it clear the documents are describing artworks and that the answer should give museum system numbers for the objects to guide with searching, but otherwise it's the standard LangChain prompt (rlm/rag-chat)

Pick the right model for the retrieval stage for documents. I got this very wrong for some time and was trying lots of the well known models (Llama3), which brought back mostly irrelevant documents (and took a lot of indexing time in doing so). Eventually I realised (thanks to reading https://www.sbert.net/examples/applications/semantic-search/README.html) that in vector space comparing a short query prompt with a longer text (object description) doesn't really make sense (asymmetric semantic search) without using the right model, so I switched to using all-minilm:l6-v2 (https://ollama.com/library/all-minilm:l6-v2) and that worked much better for results (and faster for indexing). I think there is a lot more that can be done on this.

Otherwise the setup is:

LLM - Mistral AI (free developer access - this could be replaced with ChatOllama for local LLM chat)

Embeddings - Ollama running all-minilm:l6-v2 (remotely on another local computer to try to spread the CPU/Memory load)

Vector Store - FAISS

Orchestration - LangChain

Once all that setup was done it was pretty easy to run and get results from some chat questions (sample at top of post and more in the notebook). Given the dataset I was a bit constrained in the questions (it's really more canine chat than art history chat), but even with this limited and rough implementation I think it showed how, for some types of queries, a conversational interface could be a useful discovery mode. In particular for asking about:

example objects/object existence (maybe useful for initial exploration, when there is no particular museum object in mind)

summary information (but this only works for subsets of the collection)

short factual statements (to pull out statements from within records, but this is edging towards more dangerous generative AI)

Of course, search/browse based queries would return similar example objects if the same keywords were searched for, so in this case the only difference is the interface through which the data is returned, but that might help some users by giving them an alternative way to formulate a query.

For summaries, the chat interface does provide something new ('at a glance' results), but it's constrained by the number of results passed from the retrieval phase to the LLM: the summary will be based on those results rather than on knowledge of the whole collection. For future work, it might be possible to route those types of queries to some other model so that questions can be asked of the collection as a whole.
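The limit is easy to see in a toy example. Below, every record mentions a dog, but only `k` of them reach the LLM, so any count or summary it produces can describe at most `k` objects. The records and the keyword-match retriever are invented stand-ins, not the notebook's retriever.

```python
# 50 invented records, all of which mention a dog.
COLLECTION = [f"Object {i}: drawing of a dog" for i in range(50)]

def retrieve(query, records, k=4):
    """Toy retriever: return the first k records sharing a word with the query."""
    words = set(query.lower().split())
    matches = [r for r in records if words & set(r.lower().split())]
    return matches[:k]

def summarise(context):
    """Stand-in for the LLM: it can only count what it was given."""
    return f"I found {len(context)} objects depicting dogs."

context = retrieve("how many objects show a dog", COLLECTION, k=4)
summary = summarise(context)

# What a query over the whole collection would report instead.
true_total = sum("dog" in r.lower() for r in COLLECTION)
```

Here `summary` reports 4 objects while `true_total` is 50, which is why collection-wide questions probably need a different route, such as a direct query against the full index or database.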

Returning factual statements is something new, but it also raises concerns about how much context the LLM gives in its response, which could mislead the user (and is the response correct, or a hallucination?). It might be worth trying this with only the controlled-vocabulary fields in records, rather than free text, to see if this changes the structure of the response.

More posts to follow with testing on some different models/datasets.

Some ideas for further work:

Creating embeddings of all the collection object records would then allow the whole collection to be searched, but this would require some larger compute resource

Exploring the types of query that can and can't be answered by this approach (e.g. questions about the collection as a whole) and how these questions can be answered by other approaches

A huge amount of experimentation is possible on selecting different models and tuning of them

Fine-tuning a model on collection records text

Integrating thumbnail images/object previews into the chat response to give quick access to suggested objects
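As a sketch of the last idea, the system numbers cited in a chat response could be picked out and mapped to preview links. The identifier pattern (`O` followed by digits) and the URL template are assumptions for illustration; the real identifier scheme and image endpoint would need to be substituted.

```python
import re

# Assumed format: "O" followed by digits; adjust to the real identifier scheme.
SYSTEM_NUMBER = re.compile(r"\bO\d+\b")

# Hypothetical URL template; replace with the real image or object endpoint.
THUMBNAIL_TEMPLATE = "https://example.org/objects/{system_number}/thumbnail"

def thumbnails_for(answer_text):
    """Map each system number cited in a chat answer to a thumbnail URL."""
    seen = []
    for number in SYSTEM_NUMBER.findall(answer_text):
        if number not in seen:  # keep first-mention order, drop repeats
            seen.append(number)
    return {n: THUMBNAIL_TEMPLATE.format(system_number=n) for n in seen}

reply = "Try the pug figure (O0002) or the greyhound print (O0001); O0002 is the closer match."
links = thumbnails_for(reply)
```

This relies on the prompt reliably asking the LLM to cite system numbers, so any number it returns should probably be checked against the retrieved documents before a link is shown.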

Digital Cultural Heritage Tutorials and Terminology - 2024 Roundup

2025-01-03

Useful tutorials and new terminology that I have come across this year. This is just a record of the first time I have seen the tutorial, or the word(s) or phrase used in some new context. The tutorials may be older than this year and the words may have been around for some time, but they are all new to me.

Tutorials

Data Visualisation

Data Visualisation tutorials from the great UCLAB at FH Potsdam - https://github.com/uclab-potsdam/datavis-tutorials

Data Wrangling

Data Science at the Command Line - https://jeroenjanssens.com/dsatcl/ - "Welcome to the website of the second edition of Data Science at the Command Line by Jeroen Janssens, published by O’Reilly Media in October 2021. This website is free to use. "

AI

llama.cpp guide - Running LLMs locally, on any hardware, from scratch - https://blog.steelph0enix.dev/posts/llama-cpp-guide/

History of Embeddings - https://vickiboykis.com/what_are_embeddings/index.html

Text Recognition

Automatic Text Recognition - "Explore our video tutorials on Automatic Text Recognition (ATR) and learn how to efficiently extract full text from heritage material images. Perfectly tailored for researchers, librarians, and archivists, these resources not only enhance your archival research and preservation efforts but also unlock the potential for computational analysis of your sources." - https://harmoniseatr.hypotheses.org/

Terminology

Meso-level

If macro-level is looking at a whole set of texts, and micro-level is looking at a single instance, then if you want something in-between, try meso-level: "What such midsize sets of texts with intricate relationships need, is a meso-level approach: neither corpus nor edition"

From: Lit, C. van and Roorda, D. (2024) ‘Neither Corpus Nor Edition: Building a Pipeline to Make Data Analysis Possible on Medieval Arabic Commentary Traditions’, Journal of Cultural Analytics, 9(3). Available at: https://doi.org/10.22148/001c.116372.

(I think the claim is being made for coining the usage here ?)

Ousiometrics

"We define ‘ousiometrics’ to be the quantitative study of the essential meaningful components of an entity, however represented and perceived. Used in philosophical and theological settings, the word ‘ousia’ comes from Ancient Greek ουσ´ια and is the etymological root of the word ‘essence’ whose more modern usage is our intended reference. For our purposes here, our measurement of essential meaning will rest on and by constrained by the map presented by language. We place ousiometrics within a larger field of what we will call ‘telegnomics’: The study of remotely-sensed knowledge through low-dimensional representations of meaning and stories."

Dodds, P.S. et al. (2023) ‘Ousiometrics and Telegnomics: The essence of meaning conforms to a two-dimensional powerful-weak and dangerous-safe framework with diverse corpora presenting a safety bias’. arXiv. Available at: https://doi.org/10.48550/arXiv.2110.06847.

(discovered from: Fudolig, M.I. et al. (2023) ‘A decomposition of book structure through ousiometric fluctuations in cumulative word-time’, Humanities and Social Sciences Communications, 10(1), pp. 1–12. Available at: https://doi.org/10.1057/s41599-023-01680-4. )

Thick data

"Assuming digital scholars share annotations in the widest possible sense, one can envision a scenario where a proliferation of user- and computer-generated annotations referring to a single IIIF canvas can be analyzed as ‘thick data’. This term is adapted for researchers engaging big data for ethnographical study, recognizing the idea of ‘thick description’ in the work of anthropologist Clifford Geertz. Thick description refers to ‘an account that interprets, rather than describes’ (Moore, 2018: 56 citing Geertz, 1977). Elaborated by Paul Moore, a ‘thick data’ approach shows that the ways in which data are used is a cultural rather than a technological problem, emphasizing that ‘all technologies are ultimately subject not only to the needs of the user but also to the context in which they are being used’ (2018: 52). "

Westerby, M.J. (2024) ‘Annotating Upstream: Digital Scholars, Art History, and the Interoperable Image’, Open Library of Humanities, 10(2). Available at: https://doi.org/10.16995/olh.17217.

Frictionless Reproducibility

"As a researcher steeped in the theory, practice, and history of machine learning, I was struck by David Donoho’s (2024) articulation of frictionless reproducibility—evaluation through data, code, and competition—as the core force driving progress in data science.[...] Donoho defines frictionless reproducibility by three aspirational pillars. Researchers should make data easily available and shareable. Researchers should provide easily re-executable code that processes this data to desired ends. Researchers should emphasize competitive testing as a means of evaluation"

Recht, B. (2024) ‘The Mechanics of Frictionless Reproducibility’, Harvard Data Science Review, 6(1). Available at: https://doi.org/10.1162/99608f92.f0f013d4.

Structured Extraction

"where an LLM helps turn unstructured text (or image content) into structured data"

Textpocalypse

"It is easy now to imagine a setup wherein machines could prompt other machines to put out text ad infinitum, flooding the internet with synthetic text devoid of human agency or intent: gray goo, but for the written word."

Kirschenbaum, M. (2023) ‘Prepare for the Textpocalypse’, The Atlantic, 8 March. Available at: https://www.theatlantic.com/technology/archive/2023/03/ai-chatgpt-writing-language-models/673318/ (Accessed: 1 January 2025).

(and Wulf, K. (2023) Textpocalypse: A Literary Scholar Eyes the ‘Grey Goo’ of AI, The Scholarly Kitchen. Available at: https://scholarlykitchen.sspnet.org/2023/04/13/textpocalypse-a-literary-scholar-eyes-the-grey-goo-of-ai/ (Accessed: 1 January 2025). )

Alignment Ribbons

"The horizontal alignment ribbon (henceforth simply "alignment ribbon") can be understood (and modeled) variously as a graph, a hypergraph, or a tree, and it also shares features of a table. We find it most useful for modeling and visualization to think of the alignment ribbon as a linear sequence of clusters of clusters, where the outer clusters are alignment points and the inner clusters (except the one for missing witnesses) are groups of witness readings (sequences of tokens) that would share a node in a traditional variant graph"

Birnbaum, D.J. and Dekker, R.H. (2024) ‘Visualizing textual collation’, in. Balisage: The Markup Conference. Available at: https://balisage.net/Proceedings/vol29/print/Birnbaum01/BalisageVol29-Birnbaum01.html (Accessed: 2 January 2025).

Opisthograph

(as I understand it, an early manuscript/scroll with writing on both sides ?)

slop

AI generated low-quality text.

Too many citations to mention.

Digital Cultural Heritage Papers - 2024 Roundup

2025-01-03

A roundup of papers, essays, articles, books, blogposts, reports etc published this year that I've read (ok, sometimes just scanned) on topics I'm currently interested in, i.e. mostly digital cultural heritage or AI/web/computing related. And some random other stuff.

I reserve the right to return in the future to add other papers published this year that I missed, as my interests change. There are also many other papers I should have added here from earlier in the year; I may add more if I have another burst of enthusiasm to trawl through my Zotero.

Infrastructure

Christopher Smith - On funding arts and humanities infrastructure in the UK (with a teaser for 2025 plans!) - https://anatomiesofpower.wordpress.com/2024/12/31/funding-arts-and-humanities/

Waters, D.J. (2023) ‘The emerging digital infrastructure for research in the humanities’, International Journal on Digital Libraries, 24(2), pp. 87–102. Available at: https://doi.org/10.1007/s00799-022-00332-3.

Peter Wells The National Data Library should help people deliver trustworthy data services (Dec 2024) - https://peterkwells.com/2024/12/18/the-national-data-library-should-help-people-deliver-trustworthy-data-services/

Linked Data

Sanderson, R. (2024) ‘Implementing Linked Art in a Multi-Modal Database for Cross-Collection Discovery’, Open Library of Humanities, 10(2). Available at: https://doi.org/10.16995/olh.15407.

Data Wrangling

Beyond HTTP APIs: the case for database dumps in Cultural Heritage - https://literarymachin.es/beyond-api-data-dumps/

Computational Text Analysis

Lit, C. van and Roorda, D. (2024) ‘Neither Corpus Nor Edition: Building a Pipeline to Make Data Analysis Possible on Medieval Arabic Commentary Traditions’, Journal of Cultural Analytics, 9(3). Available at: https://doi.org/10.22148/001c.116372.

(conf paper abstract) "Exploring Zero-Shot Named Entity Recognition in Multilingual Historical Travelogues Using Open-Source Large Language Models" - https://clin34.leidenuniv.nl/abstracts/exploring-zero-shot-named-entity-recognition-in-multilingual-historical-travelogues-using-open-source-large-language-models/

AI

DeepMind - A new golden age of discovery - https://deepmind.google/public-policy/ai-for-science/ - "In this essay, we take a tour of how AI is transforming scientific disciplines from genomics to computer science to weather forecasting. Some scientists are training their own AI models, while others are fine-tuning existing AI models, or using these models’ predictions to accelerate their research"

ODI A data for AI taxonomy - https://theodi.org/news-and-events/blog/a-data-for-ai-taxonomy/ - "[...] we set out to develop a taxonomy of the data involved in developing, using and monitoring foundation AI models and systems. It is a response to the way that the data used to train models is often described as if a static, singular blob, and to demonstrate the many types of data needed to build, use and monitor AI systems safely and effectively."

A Large Language Model walks into an archive... https://cblevins.github.io/posts/llm-primary-sources/

VLM Art Analysis by Microsoft Florence-2 and Alibaba Cloud Qwen2-VL - https://huggingface.co/blog/PandorAI1995/vlm-art-analysis-by-florence-2-b-and-qwen2-vl-2b

OCR Processing and Text in Image Analysis with Florence-2-base and Qwen2-VL-2B - https://huggingface.co/blog/PandorAI1995/ocr-processing-text-in-image-analysis-vlm-models

OpenAI - Introducing SimpleQA - "Factuality is a complicated topic because it is hard to measure—evaluating the factuality of any given arbitrary claim is challenging, and language models can generate long completions that contain dozens of factual claims. In SimpleQA, we will focus on short, fact-seeking queries, which reduces the scope of the benchmark but makes measuring factuality much more tractable." - https://openai.com/index/introducing-simpleqa/

Digital Humanities

The Bloomsbury Handbook to the Digital Humanities - https://www.bloomsbury.com/uk/bloomsbury-handbook-to-the-digital-humanities-9781350452572/#

Digital Editions

On Automating Editions The Affordances of Handwritten Text Recognition Platforms for Scholarly Editing - https://scholarlyediting.org/issues/41/on-automating-editions/

3D Printing

Volpe, Y. et al. (2014) ‘Computer-based methodologies for semi-automatic 3D model generation from paintings’, International Journal of Computer Aided Engineering and Technology, 6(1), p. 88. Available at: https://doi.org/10.1504/IJCAET.2014.058012.

Web Development

"[...] this investigation into JavaScript-first frontend culture and how it broke US public services has been released in four parts." - https://infrequently.org/2024/08/the-landscape/

Conference Proceedings

SWIB24 - Semantic Web in Libraries - https://swib.org/swib24/programme.html

Computational Humanities Research CHR 2024 - https://ceur-ws.org/Vol-3834/

Vis4DH 2024 - Didn't happen ?

Journals

New Journals I've come across (or re-discovered):

Interdisciplinary Digital Engagement in Arts & Humanities (IDEAH) - https://ideah.pubpub.org/

Public Humanities - https://www.cambridge.org/core/journals/public-humanities

RIDE - "RIDE is an open access review journal dedicated to digital editions and resources" - https://ride.i-d-e.de/

DH Benelux Journal - "DH Benelux Journal is the official journal of the DH Benelux community, which fosters collaboration between researchers in the digital humanities in Belgium, Luxembourg and the Netherlands. " - https://journal.dhbenelux.org/

Journal of Open Research Software - "The Journal of Open Research Software (JORS) features peer reviewed Software Metapapers describing research software with high reuse potential." - https://openresearchsoftware.metajnl.com/

Transformations - A DARIAH Journal is a multilingual journal created in 2024 by the European research infrastructure DARIAH ERIC. This journal is an ongoing publication with thematic issues in Digital Humanities, humanities, social sciences, and the arts. The journal is particularly interested in the use of digital tools, methods, and resources in a reproducible approach. It welcomes scientific contributions on collections of data, workflows and software analysis. - https://transformations.episciences.org/

Digital Cultural Heritage Tools - 2024 Roundup

2024-12-30

Continuing my roundup of resources from last year. This time, tools, platforms, standards and other types of useable resources mostly relating to digital cultural heritage but sometimes more general.

AI

Croissant Format - "The Croissant metadata format simplifies how data is used by ML models. It provides a vocabulary for dataset attributes, streamlining how data is loaded across ML frameworks such as PyTorch, TensorFlow or JAX. In doing so, Croissant enables the interchange of datasets between ML frameworks and beyond, tackling a variety of discoverability, portability, reproducibility, and responsible AI (RAI) challenges." - https://docs.mlcommons.org/croissant/docs/croissant-spec.html

Ai2 OpenScholar - "To help scientists effectively navigate and synthesize scientific literature, we introduce Ai2 OpenScholar—a collaborative effort between the University of Washington and the Allen Institute for AI. OpenScholar is a retrieval-augmented language model (LM) designed to answer user queries by first searching for relevant papers in the literature and then generating responses grounded in those sources. Below are some examples:" - https://allenai.org/blog/openscholar

chonkie - The no-nonsense RAG chunking library that's lightweight, lightning-fast, and ready to CHONK your texts - https://github.com/chonkie-ai/chonkie

AI4Culture - "AI4Culture is designed to provide comprehensive training and resources for individuals and institutions interested in applying Artificial Intelligence (AI) technologies in the cultural heritage sector. It aims to empower users with the knowledge and skills needed to leverage AI for preserving, managing, and promoting cultural heritage." - https://ai4culture.eu/

AI Risk Repository - "A comprehensive living database of over 700 AI risks categorized by their cause and risk domain." - https://airisk.mit.edu/

Cultural Heritage Collections Visualisation

Collection Space Navigator - https://datalab.allardpierson.nl/meet_the_data/01_Explore%20the%20Collections.html - Allard Pierson, University of Amsterdam

InTaVia - a platform that "[...] supports the practices of data retrieval, creation, curation, analysis, and communication with coherent visualization support for multiple types of entities. We illustrate the added value of this open platform for storytelling with four case studies, focusing on (a) the life of Albrecht Dürer (person biography), (b) the Saliera salt cellar by Benvenuto Cellini (object biography), (c) the artist community of Lake Tuusula (group biography), and (d) the history of the Hofburg building complex in Vienna (place biography)." - https://intavia.eu/

Cultural Heritage Cataloguing

Dédalo - https://dedalo.dev/the_project#dd1100_62 - "Dédalo is a project focused on the field of digital humanities, on the need to analyze Cultural Heritage with digital tools, allowing machines to understand the cultural, social, and historical processes that generate Heritage and Memory. " - Project running since 1998

Textual Analysis

Coconut Libtool - https://www.coconut-libtool.com/ - "All-in-one data mining and textual analysis tool for everyone." - A sort of Voyant Tools/Palladio web based tool for analysis of CSV type files

CEDAR - https://voices.uchicago.edu/cedar/ - "CEDAR (Critical Editions for Digital Analysis and Research) is a multi-project digital humanities initiative in which innovative computational methods are employed in textual studies."

COLaF - "Through the COLaF project (Corpus et Outils pour les Langues de France, Corpus and Tools for the Languages of France), Inria aims to contribute to the development of free corpora and tools for French and other languages of France[...]" - https://colaf.huma-num.fr/

TextFrame - "The ITF specification is intended to facilitate systematic referencing and reuse of textual resources in repositories in a manner that is both user- and machine-friendly." - https://textframe.io/

Text Recognition

HTR-United - "HTR-United is a catalog that lists highly documented training datasets used for automatic transcription or segmentation models. HTR-United standardizes dataset descriptions using a schema, offers guidelines for organizing data repositories, and provides tools for quality control and continuous documentation" - https://htr-united.github.io/index.html

Data Wrangling

Invisible XML - "Invisible XML (ixml) is a method for treating non-XML documents as if they were XML, enabling authors to write documents and data in a format they prefer while providing XML for processes that are more effective with XML content." - https://invisiblexml.org/

Linked Data

grlc - https://grlc.io/ - "grlc makes all your Linked Data accessible to the Web by automatically converting your SPARQL queries into RESTful APIs. With (almost) no effort!"

Visual Analysis

AIKON - https://aikon-platform.github.io/ - "Aikon is a modular platform designed to empower humanities scholars in leveraging artificial intelligence and computer vision methods for analyzing large-scale heritage collections. It offers a user-friendly interface for visualizing, extracting, and analyzing illustrations from historical documents, fostering interdisciplinary collaboration and sustainability across digital humanities projects. Built on proven technologies and interoperable formats, Aikon's adaptable architecture supports all projects involving visual materials. "

Publishing

Edition Crafter - https://editioncrafter.org/ - An open source and customizable publishing tool, EditionCrafter allows users to easily publish digital editions as feature-rich and sustainable static sites.

Manuscripts

VisColl received a new grant from NEH to fund the VCEditor 2.0 - https://viscoll.org/2024/08/28/vceditor-2-0-has-received-an-neh-digital-humanities-advancement-grant/ - "The grant will support work undertaken by staff in the Schoenberg Institute for Manuscript Studies and the Penn Libraries Digital Library Development team. This funding will support the continued development of VCEditor functionality[...]"

Modelling

ComSES Network - "an international community and cyberinfrastructure to support transparency and reproducibility for computational models & their digital context + educational resources and FAQ's for agent based modeling" - https://www.comses.net/

Databases

GQL: A New ISO Standard for Querying Graph Databases - https://thenewstack.io/gql-a-new-iso-standard-for-querying-graph-databases/

GeoSpatial

OSM Buildings - Free and open source web viewer for 3D buildings - https://osmbuildings.org/documentation/viewer/

Old Maps Online - https://www.oldmapsonline.org

Digitisation

Arkindex - "[...]our platform for managing and processing large collections of digitized documents[...]" - https://teklia.com/blog/arkindex-goes-open-source/

Environmental

Digital Humanities Climate Coalition Toolkit - https://sas-dhrh.github.io/dhcc-toolkit/

Archives

EAD 2002 XML/EAC-CPF to Records in Context (RiC-O) converter - https://github.com/ArchivesNationalesFR/rico-converter

Random

Is my blue your blue? - https://ismy.blue/

EZ-Tree - Procedural tree generator - https://github.com/dgreenheck/ez-tree

Digital Cultural Heritage Projects - 2024 Roundup

2024-12-30

An attempt to record new research projects, research infrastructures, websites, etc I've come across this year to try to keep track of them, hopefully of use to others but also for my own selfish reasons to free up space in my poor crowded brain (and reduce web browser tabs). It's also because I've found it hard to note them otherwise. It's surprising to me that there is still no good way I know of to record projects in progress (to keep track of them), upcoming conferences (for the call for papers, and then to read proceedings) and journals of interest (CFP/published). There must be someone working on the Zotero equivalent for these standard workflows of research ?

I'm hoping for this to be an annual tradition (tradition in the sense that this is the first time I've done it and who knows, I may do it again next year). But given the 8 year gap between this blog post and the previous one, the odds are not great.

Other posts will follow with new or updated research related tools, another with articles/reports of particular interest, and then (most importantly?) any new terminology I've come across this year, again mostly to save myself having to look up for the umpteenth time what 'meso-level' actually means.

Project Outputs

These projects may have been around for a long time but I have possibly just discovered their outputs (i.e. the website with the results of the project's work or some in-progress publication/media).

Mapping Color in History - https://mappingcolor.fas.harvard.edu/ - "Mapping Color in History™ is a searchable database of pigment analysis in Asian paintings"

Language of Bindings - https://www.ligatus.org.uk/lob/ - "The Language of Bindings Thesaurus (LoB) includes terms which can be used to describe historical binding structures."

CATALOG of DISTINCTIVE TYPE (CDT), Restoration England (1660-1700) - https://cdt.library.cmu.edu

Sloane Lab Knowledge Base - https://knowledgebase.sloanelab.org/resource/Start - "The Sloane Lab Knowledge Base is an interactive portal reuniting the collections of Sir Hans Sloane, which consist of the founding collections of the British Museum, the Natural History Museum and the British Library. It is still in development, with more features being built and datasets in our ingestion pipeline. The Sloane Lab Knowledge Base enables the cross-searching and investigation of object, records, people and places in the Sloane Collections. The Sloane Lab Knowledge Base makes also possible the crosslinking of Sloane’s historical manuscript catalogue entries with contemporary database records found in museum collections today. "

imagineRio - "A searchable digital atlas that illustrates the social and urban evolution of Rio de Janeiro, as it existed and as it was imagined. Views of the city created by artists, maps by cartographers, and site plans by architects or urbanists are all located in both time and space. It is a web environment that offers creative new ways for scholars, students, and residents to visualize the past by seeing historical and modern imagery against an interactive map that accurately presents the city since its founding." - https://www.imaginerio.org/en

Zamani Project - "Zamani Project undertakes data collection and analysis, heritage communication, and training and capacity building for experts and the public so that they have access to high-quality spatial heritage data, and can learn from, conserve, and protect heritage." - https://zamaniproject.org/

Data/Culture - "Data/Culture is a sandbox for the re-use of data and tools in the humanities and arts, in ways that develop high-quality research and strong collaborative communities. " - https://www.turing.ac.uk/research/research-projects/dataculture-building-sustainable-communities-around-arts-and-humanities

A Gamification Approach to Literary Network Analysis - Battle of the Plays playing cards (!) - https://sukultur.de/produkt/battle-of-the-plays-a-gamification-approach-to-literary-network-analysis/ (why don't more research projects create games from their outputs!)

Project Announcements

Infrastructure

ECHOES - https://www.echoes-eccch.eu/ - "The Cultural Heritage Cloud (ECCCH) is a shared platform designed to provide heritage professionals and researchers with access to data, scientific resources, training, and advanced digital tools tailored to suit their needs" - Part of the Cultural Heritage Cloud Horizon Infrastructure funding.

Manuscripts

LostMA - ‘LostMa: The Lost Manuscripts of Medieval Europe: Modelling the Transmission of Texts | École Nationale Des Chartes - PSL’. Accessed 30 December 2024. https://www.chartes.psl.eu/en/research/centre-jean-mabillon/research-projects/lostma-lost-manuscripts-medieval-europe-modelling-transmission-texts. - Jan 2024 - Dec 2028 - Ecole nationale des Chartes - France

INSULAR - https://le.ac.uk/news/2024/april/insular-project - "The European Research Council (ERC) has awarded a prestigious Advanced Grant, for €2.5m, to an ambitious new project to study early medieval manuscripts made in Britain and Ireland between AD 600–900." - Institutions: University of Leicester, University of Göttingen, Bodleian Library, University of Cambridge, Trinity College Dublin, BNF, Det Kongelige Bibliotek, Leuven University

DISCOVER - https://erc-discover.github.io/ - "Our goal is to develop approaches to assist experts in identifying and analyzing patterns. Indeed, while the success of deep learning on visual data is undeniable, applications are often limited to the supervised learning scenario where the algorithm tries to infer a label for a new image based on the annotations made by experts in a reference dataset."

STEMMA - "This project develops and applies a data-driven approach in order to provide the first macro-level view of the circulation of early modern English poetry in manuscript. It focuses on English verse manuscripts written and used between the introduction of printing in England in 1475 and 1700, by which time the rapid changes in both literary taste and publishing norms ushered in by the Restoration had fully transformed literary culture. The project includes manuscripts circulating in England and anywhere else English was spoken and read, including Ireland, the North American colonies, and continental exile communities." - https://stemma.universityofgalway.ie/

AI

Project StoryMachine - https://www.dfg.de/en/news/news-topics/announcements-proposals/2024/ifr-24-110 - Exploring Implications of Recommender Based Spatial Hypertext Systems for Folklore and the Humanities - Hochschule Hof, Ludwig-Maximilians-Universität München, Universität Regensburg, University of London

Developing a public-interest training commons of books - https://www.authorsalliance.org/2024/12/05/developing-a-public-interest-training-commons-of-books/

Project Updates

(i.e. ongoing projects I've discovered this year or re-discovered after some announcement)

Network Analysis

DISSINET - https://dissinet.cz/ - "The “Dissident Networks Project” (DISSINET), hosted at Masaryk University’s Centre for the Digital Research of Religion, is a research initiative exploring dissident and inquisitorial cultures in medieval Europe from the perspective of social network analysis, geographic information science, and computational text analysis"

AI

RePAIR Project - https://www.repairproject.eu/ - "an acronym for Reconstructing the Past: Artificial Intelligence and Robotics meet Cultural Heritage. State-of-the-art technology will, for the first time, be employed in the physical reconstruction of archaeological artefacts, which are mostly fragmentary and difficult to reassemble."